Volume 11 - Year 2024 - Pages 44-50

DOI: 10.11159/jbeb.2024.006

Enhancing Minimally Invasive Spinal Procedures Through Computer Vision and Augmented Reality Techniques

Jingwen Hui, Songyuan Lu, Eric Lee, and Frank E. Talke

University of California San Diego, Center for Memory and Recording Research

3164 Matthews Ln, La Jolla, California, USA 92122

jwhui@ucsd.edu; sol025@ucsd.edu; esl003@ucsd.edu; ftalke@ucsd.edu (corresponding author)

Abstract - Surgical navigation technologies have transformed the healthcare landscape, playing a key role in enhancing precision, safety, and efficiency of various medical procedures. In this paper, we present a cost-effective 3D computer navigation approach that leverages augmented reality (AR) and computer vision (CV) to aid in spinal pain management procedures like radiofrequency ablation (RFA) and epidural steroid injections (ESI). We evaluate the accuracy of AR overlay of key anatomical features on the patient (obtained from MRI scans or CAD models of the patient) through simulated spinal injection experiments and assess the accuracy of the CV algorithm via optical tracking experiments. Our findings indicate that AR and CV are valuable tools for surgical guidance, offering a low-cost alternative to traditional fluoroscopy.

Keywords: Augmented Reality, Computer Vision, Biomedical Devices.

© Copyright 2024 Authors. This is an Open Access article published under the Creative Commons Attribution License terms. Unrestricted use, distribution, and reproduction in any medium are permitted, provided the original work is properly cited.

Date Received: 2024-06-07

Date Revised: 2024-10-05

Date Accepted: 2024-10-15

Date Published: 2024-11-22

1. Introduction

Surgical procedures depend on precise localization of instruments relative to anatomical features to ensure accuracy and safety. Traditionally, most healthcare facilities rely on fluoroscopy, the use of X-rays to provide real-time visualization of the area of interest during procedures [1]. Fluoroscopy is employed in spinal procedures such as radiofrequency ablation (RFA) and epidural steroid injection (ESI) [2]. In RFA, heat is delivered via a radiofrequency needle to targeted nerves. The heat destroys the targeted nerves and prevents pain signals from reaching the brain, thereby offering long-term pain relief [3]. ESI, on the other hand, involves the injection of steroids or anti-inflammatory medication into the spine to treat inflamed nerve roots [4]. Despite their consistency and precision, both techniques require fluoroscopy to guide needle placement, which exposes patients and medical staff to radiation. Radiation exposure can cause minor skin irritation as well as more serious problems such as organ damage and cancer [5]. In addition, fluoroscopy systems cost between $250,000 and $800,000, creating a significant economic barrier for small to mid-size healthcare providers [6].

In recent years, augmented reality (AR) has demonstrated new possibilities for surgical navigation by enhancing visualization of internal anatomy without the need for fluoroscopy [7]. For instance, Molina et al. [8] demonstrated the effectiveness of AR in localizing bones and soft tissues in live patients during surgery.

In parallel, optical tracking devices have emerged as an indispensable tool for boosting surgical precision. Tracking systems identify key anatomical features relative to surgical equipment, allowing for safer and more accurate trajectories of surgical instruments [9]. For instance, optical tracking devices have been used to assist with the localization of the “Scottie Dog”, the L5 vertebra, during epidural steroid injections [10]. State-of-the-art optical systems typically use infrared stereoscopic cameras to capture reflective markers, allowing for 3D localization of instruments and anatomical features [11]. For depth perception, a stereo system uses two cameras and compares their images, which are taken from slightly different perspectives. Despite these advancements, optical tracking systems still face significant challenges due to their high cost and the difficulty of implementing them in operating environments where invasive skin markers are needed [12].
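The stereo depth principle mentioned above can be illustrated with the standard relation for a rectified camera pair, Z = f·B/d, where f is the focal length in pixels, B the distance between the two cameras, and d the disparity of a point between the two images. The following is a minimal sketch only; the function name and all numeric values are assumptions chosen for illustration and do not describe any particular tracking product.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical values: 1000 px focal length, 12.5 cm baseline, 250 px disparity.
depth_m = depth_from_disparity(focal_px=1000.0, baseline_m=0.125, disparity_px=250.0)
print(f"estimated depth: {depth_m} m")
```

A larger disparity corresponds to a closer point, which is why depth resolution degrades with distance for a fixed baseline.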

In this paper, we introduce the use of a low-cost optical tracking system with AR overlay of key anatomical features on the patient (from MRI scans or CAD models of the patient) for spinal pain management surgeries. By conducting comprehensive AR experiments on a simplified spinal model together with CV tests on a prototype marker tracking system, we have simulated operating room conditions and anatomical complexities to assess the feasibility of such a system in real-world operating room scenarios.

The Use of CV and AR In Back Pain Management

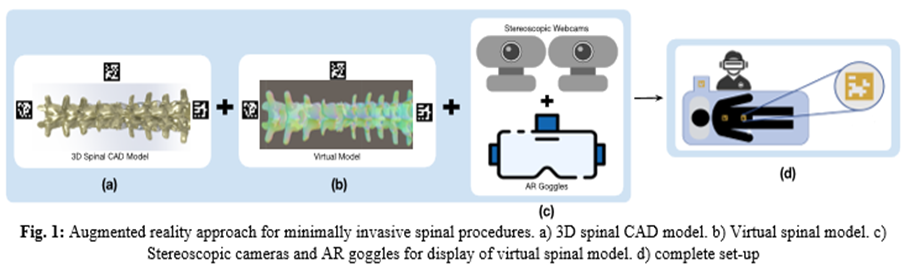

To show the feasibility of AR and CV in back pain management, we first created a 3D model of the surgical region of interest (from MRI scans or CAD models of the patient) and converted this 3D model into a virtual model. We then displayed this virtual model on the surgical region of interest using augmented reality goggles and fiducial markers (ArUco markers) placed at the patient’s spinal position. Thus, when viewing the surgical region of interest via AR, the surgeon sees not only the surface of the patient but also a 3D virtual anatomical model of the patient’s spine below the surface. To shorten development time, we generated the virtual spinal model in this paper using 3D printing and computer-aided design (CAD) rather than MRI images. Employing CV algorithms and ArUco markers to track the patient position, we overlaid the virtual image of the patient’s vertebrae on the patient using augmented reality. A schematic of our approach is shown in Figure 1. Here, the CAD model shown in Fig. 1a is converted into the virtual model shown in Fig. 1b. The position of the patient is tracked in real time using ArUco markers, stereoscopic cameras (Logitech C920x HD Pro Webcams) and Vuforia, a 3D AR software development kit (Fig. 1c). The schematic of the complete system is shown in Fig. 1d, including the surgeon with AR goggles, the patient, and the ArUco marker registrations.

In the following sections, we will present separate proof-of-concept experiments for augmented reality (AR) and optical tracking. In future work, we plan to integrate both technologies into a unified prototype and conduct mock surgeries by a trained physician to test the viability and practicality of the prototype for real-world surgical applications.

2. Materials and Methods

The following sections are divided into two parts, Augmented Reality and Optical Tracking, to clearly distinguish the respective experiments.

Augmented Reality (AR)

The AR approach used in this paper eliminates the dependence on fluoroscopy during surgical navigation in pain management. To evaluate the benefits of this approach over traditional methods, we have performed experiments to study the reliability and success rate of finding a targeted location on a specially designed spinal model with and without the use of AR.

2.1 Spinal Model Design

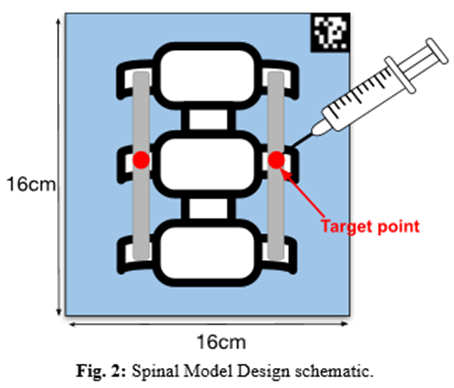

In Fig. 2 we show the schematic of the spinal model used for the evaluation of AR in spinal surgeries. The model consists of a 3D-printed stereolithographic spine segment with three vertebrae, encased in a 160 mm x 160 mm x 100 mm rectangular prism of ballistic gel. The model was marked at two locations on the center vertebra to serve as target points during needle insertion (Fig. 2). A feedback detection mechanism was implemented to indicate whether the needle was placed within the target region during an experiment. The operator aimed the tip of the needle at the target points (center red dot), which represent the medial branch nerve, a common target in pain management procedures [8]. During experiments, the model was positioned vertically, with its top surface covered to restrict the operator’s view of the model.

2.2 Tracking

We have used an AR 3D platform (Unity) to build the AR environment. An AR 3D software development package (Vuforia) was used for the detection of the ArUco markers. The open-source spine model that was used to construct the physical model was converted to an AR object in Unity. The position of the model was tracked in real-time using stereoscopic cameras (Logitech C920x HD Pro Webcams) coupled with Vuforia, which tracks the position of the ArUco marker and overlays a virtual spine model with respect to the ArUco marker’s position.
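Overlaying the virtual spine "with respect to the ArUco marker's position" amounts to chaining two rigid transforms: the marker pose reported by the tracker and a fixed marker-to-model offset obtained at registration. The sketch below illustrates that composition with plain 4x4 homogeneous matrices. It is not the Unity/Vuforia implementation used in this paper; the helper names (`matmul4`, `pose_z`) and all numeric values are hypothetical.

```python
import math


def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def pose_z(yaw_deg, tx, ty, tz):
    """Homogeneous transform: rotation about the Z axis by yaw_deg plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


# Hypothetical marker pose in the camera frame, as a tracker might report it (cm).
T_cam_marker = pose_z(90.0, 10.0, 0.0, 50.0)
# Hypothetical fixed offset of the spine model relative to the marker (cm).
T_marker_model = pose_z(0.0, 5.0, 0.0, 0.0)

# Pose of the virtual spine model in the camera frame.
T_cam_model = matmul4(T_cam_marker, T_marker_model)
model_origin_in_camera = [row[3] for row in T_cam_model[:3]]
print([round(v, 3) for v in model_origin_in_camera])
```

Because the marker-to-model offset is fixed, only the marker pose needs to be re-estimated each frame; the overlay then follows the marker as it moves.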

The AR environment and the overlaid model were viewed through a commercially available headset (Meta Quest 3, Meta Platforms). The Quest Link feature connects the Quest 3 to a Windows PC, which runs the AR program we built in Unity and displays the simulation. The overlaid AR model gives the physician a visual augmentation that shows the virtual spine model during experiments.

2.3 Experimental Setup/Detection Feedback

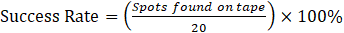

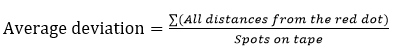

A total of 40 insertion trials were performed, 20 using AR guidance and 20 using visual guidance only (without fluoroscopy or AR). A successful trial is defined as one in which the needle strikes the 15 mm wide tape on the middle vertebra, leaving a mark. We analyzed the success rate and average deviation from the target for both sets of trials using Eqs. (1-2).
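As a minimal sketch of the two metrics, assuming the success rate is the fraction of trials in which the needle hit the tape and the average deviation is the arithmetic mean of the measured distances from the target, the computation could look as follows. The function names and the trial data are hypothetical and are not the recorded measurements.

```python
def success_rate(hits):
    """Fraction of trials in which the needle struck the target tape."""
    return sum(hits) / len(hits)


def average_deviation(deviations_cm):
    """Mean distance (cm) between the needle mark and the target point."""
    return sum(deviations_cm) / len(deviations_cm)


# Hypothetical outcome list: 17 hits out of 20 trials.
hits = [True] * 17 + [False] * 3
rate = success_rate(hits)

# Hypothetical deviations (cm), made up for illustration only.
deviations_cm = [0.2, 0.3, 0.4, 0.3]
avg = average_deviation(deviations_cm)

print(f"success rate = {rate:.0%}, average deviation = {avg:.2f} cm")
```

With 20 trials per group, each additional successful insertion changes the success rate by 5 percentage points.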

Optical Tracking

The AR package we used for our initial tracking experiments, Vuforia, is not specifically designed for ArUco markers. To improve the system accuracy to a level comparable to fluoroscopy, it is crucial to develop custom tracking algorithms tailored to register the ArUco markers. We chose OpenCV for Unity to develop our algorithm because OpenCV has specialized functions for tracking ArUco markers [13,14]. In addition, the optical tracking system we develop in this paper deviates from the conventional infrared stereoscopic camera setup, which requires invasive markers and specific operating environments. To assess the precision of our tracking system, we tested our tracking algorithm with a specially designed X-Y ground truth platform that provides the true X and Y positions in the real world [15].

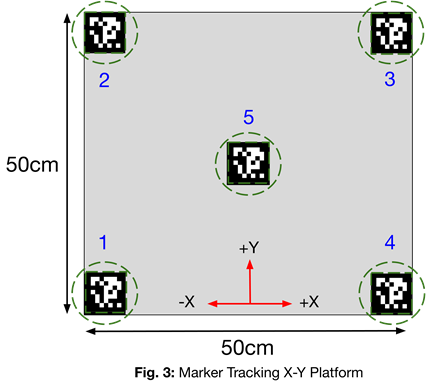

2.4 Ground Truth Platform

Fig. 3 shows the platform from a bird’s-eye view. The platform is 50 cm by 50 cm, and test markers are placed at the center and at the four corners. Tracking experiments were conducted with stationary markers in the X-Y plane. Future experiments will include marker tracking during motion in all X-Y-Z directions to simulate subtle movements of patients during spinal procedures.

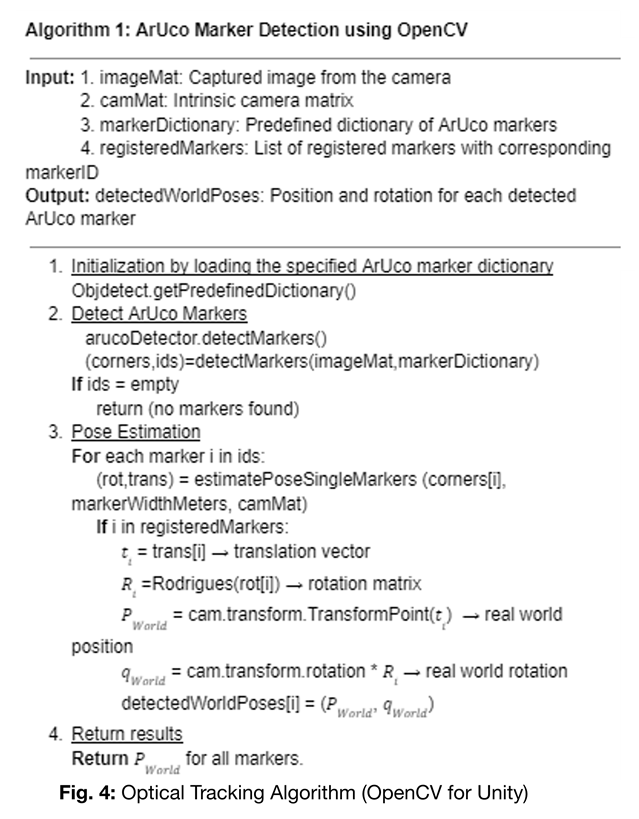

2.5 Tracking Software/Algorithm

We show the optical tracking algorithm used to detect ArUco markers on our platform in Fig. 4. We used OpenCV for Unity’s ArUco marker detection functions for optimal results. This algorithm is integrated with stereoscopic cameras.

As shown in Fig. 4, our algorithm begins by initializing its data structures and marker dictionaries. After the stereoscopic camera captures an image, the algorithm detects the ArUco markers and, using the camera's intrinsic parameters (i.e., the focal length matrix and the camera center coordinates) [16], estimates the pose of each registered marker, yielding its real-world position and rotation. To ensure robustness, the algorithm employs noise-cancelling and error-correction mechanisms to handle ambiguous cases.
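To illustrate the role of the intrinsic parameters mentioned above, the sketch below back-projects a pixel to metric coordinates under an ideal pinhole model with a known distance to the platform. This is a simplified stand-in for full marker pose estimation, not the OpenCV for Unity routine used in this work; the function name, the intrinsic values, and the camera height are assumptions for illustration, and lens distortion is ignored.

```python
def pixel_to_camera_xy(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a known depth using pinhole intrinsics.

    fx, fy: focal lengths in pixels; cx, cy: principal point (camera center) in pixels.
    Returns (x, y) in the same unit as depth.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y


# Hypothetical intrinsics for a 1920x1080 image and a camera 100 cm above the platform.
FX, FY, CX, CY = 1000.0, 1000.0, 960.0, 540.0
CAMERA_HEIGHT_CM = 100.0

# A marker center detected 250 px to the right of the principal point.
x_cm, y_cm = pixel_to_camera_xy(1210.0, 540.0, CAMERA_HEIGHT_CM, FX, FY, CX, CY)
print(x_cm, y_cm)
```

In practice the intrinsics come from a camera calibration step, and errors in them translate directly into position errors that grow with distance from the image center.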

2.6 Experimental Setup

We conducted tracking experiments with five ArUco markers placed at the corners and the center of the platform (labelled according to Fig. 3). With the center ArUco marker taken as the coordinate origin (0 cm, 0 cm), the exact positions of the other markers follow directly from the dimensions of the platform. We anchored a stereoscopic camera directly above the center ArUco marker. Using the optical tracking algorithm detailed in Fig. 4, the camera captured 10 real-time tracking results for each point. We averaged the results for each point, transformed the OpenCV coordinates to real-world coordinates, and compared these calculated coordinates with the exact real-world coordinates obtained from the X-Y platform to calculate the tracking error. For each dimension (X, Y), we report the marker with the maximum error and its deviation, i.e., the absolute difference between the experimental and the real-world coordinate.
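The ground-truth layout and the per-marker averaging described above can be sketched as follows. The marker numbering follows the real-world coordinates listed in Table 2; the function names and the sample readings are hypothetical and serve only to show the bookkeeping.

```python
from statistics import mean

PLATFORM_SIDE_CM = 50.0  # platform is 50 cm by 50 cm


def ground_truth_positions(side_cm):
    """Real-world (X, Y) of the five markers, with the center marker at the origin."""
    h = side_cm / 2
    return {1: (-h, -h), 2: (-h, h), 3: (h, h), 4: (h, -h), 5: (0.0, 0.0)}


def average_position(samples):
    """Average a list of (x, y) tracking results for one marker."""
    xs, ys = zip(*samples)
    return mean(xs), mean(ys)


truth = ground_truth_positions(PLATFORM_SIDE_CM)

# Hypothetical readings for one marker (the experiment used 10 per point).
samples = [(24.0, 25.0), (25.0, 26.0), (26.0, 24.0)]
print(truth[3], average_position(samples))
```

Averaging several stationary readings reduces the influence of frame-to-frame jitter before the result is compared with the ground truth.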

3. Results

Augmented Reality (AR)

We assess our AR system using two metrics, the success rate and the average deviation distance (Table 1).

Table 1. Experimental Success Rate and Average Deviation with AR and without AR system

| Trials | Without AR System | With AR System |
|---|---|---|
| Success Rate | 45% | 85% |
| Average Deviation | 0.51 cm | 0.28 cm |

Table 1 shows that the use of the AR system increases the experimental success rate from 45% to 85%, and reduces the average deviation from 0.51 cm to 0.28 cm.

Optical Tracking

We show the (X,Y) coordinates (already transformed to real world coordinates) of our optical tracking results in comparison with the actual real-world coordinates (Table 2).

Table 2. Experimental coordinates of ArUco markers versus Actual coordinates of ArUco markers

| Markers | Experimental (X,Y) in cm | Real-World (X,Y) in cm |
|---|---|---|
| 1 | (-25.0, -25.0) | (-25.0, -25.0) |
| 2 | (-25.0, 25.0) | (-25.0, 25.0) |
| 3 | (24.1, 25.3) | (25.0, 25.0) |
| 4 | (25.3, -24.3) | (25.0, -25.0) |
| 5 | (0.0, 0.0) | (0.0, 0.0) |

Table 2 shows the experimental and real-world coordinates for ArUco markers 1 to 5. We observe that experimental coordinates are very close to the real-world coordinates for all five markers, showing a maximum error of 0.9 cm.

Table 3. Maximum Deviation for X and Y Directions

| Maximum Error | Deviation (cm) |
|---|---|
| X-Direction (Marker 3) | 0.9 |
| Y-Direction (Marker 4) | 0.7 |

Table 3 shows the maximum deviation for the system, with a maximum error of 0.9 cm in the X direction and 0.7 cm in the Y direction. These values characterize the accuracy of the current optical tracking system on the 50 cm by 50 cm platform. Further optimization is expected to reduce the error.
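The entries of Table 3 can be reproduced from the coordinates in Table 2 by taking, for each axis, the largest absolute difference between the experimental and real-world values. The short sketch below does this; the variable and function names are illustrative, and the absolute-difference definition of deviation is an assumption that is consistent with the reported values.

```python
# Coordinates in cm, copied from Table 2.
experimental = {1: (-25.0, -25.0), 2: (-25.0, 25.0), 3: (24.1, 25.3),
                4: (25.3, -24.3), 5: (0.0, 0.0)}
real_world = {1: (-25.0, -25.0), 2: (-25.0, 25.0), 3: (25.0, 25.0),
              4: (25.0, -25.0), 5: (0.0, 0.0)}


def max_axis_deviation(axis):
    """Return (marker, deviation in cm) with the largest absolute error on one axis."""
    def deviation(marker):
        return abs(experimental[marker][axis] - real_world[marker][axis])

    worst = max(experimental, key=deviation)
    return worst, round(deviation(worst), 1)


print("X:", max_axis_deviation(0))  # marker 3, 0.9 cm
print("Y:", max_axis_deviation(1))  # marker 4, 0.7 cm
```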

4. Discussion and Conclusions

In this paper, we conducted two separate but related experiments with the purpose of demonstrating the potential of the use of AR and optical tracking in minimally invasive spinal procedures. These two studies are the basis for a fully integrated, low-cost 3D navigation system utilizing AR and CV.

We have studied the potential of augmented reality (AR) because it serves as an affordable and radiation-free alternative to fluoroscopy. We built a $600 AR system with open-source modelling files and publicly available software and studied its accuracy on a 3D-printed three-vertebra spinal model. Compared to experiments performed without AR assistance, our system increased the success rate from 45% to 85% and reduced the average deviation distance from 0.51 cm to 0.28 cm. In addition, the use of AR is an attractive alternative for healthcare facilities because it provides a safer environment for both patients and medical professionals by reducing radiation exposure in comparison to fluoroscopy. Current inaccuracies in the AR overlay, including slight trembling, result from insufficient anchoring with a single ArUco marker. The lack of integration of the OpenCV algorithm into our AR setup further contributes to these imperfections. Future research is needed to achieve near-fluoroscopy accuracy. We will start by combining our optical tracking algorithm with our AR technologies and experimenting with multiple ArUco markers in various locations. Then, we plan to improve accuracy by developing trackable surgical instruments to aid visualization and positioning of the surgical area of interest and instrument tips. We also plan to implement recurrent neural networks (RNN) because of their error minimization potential [16], with the goal of reducing the deviation below 0.14 cm, the clinically accepted margin of error in spinal pain management procedures.

We have evaluated our optical tracking system using ArUco markers and a specially designed X-Y platform. The algorithm was developed using OpenCV for Unity, an open-source package designed to enable computer vision tasks within Unity. Our algorithm was designed to improve the accuracy of marker tracking in various environments (such as different lighting conditions and room environments). The experimental results show that our system achieved a maximum deviation of 0.9 cm in the X direction and 0.7 cm in the Y direction. Although these findings indicate the reliability of our tracking system, further steps can be taken to improve the tracking and to simulate real-world settings. In particular, implementation of the so-called “blob extraction method” and of two-dimensional prediction techniques is expected to enhance system robustness and accuracy under surgical constraints [18]. In addition, the inclusion of a convolutional neural network (CNN) should be studied to reduce tracking noise [19,20]. These additions are intended to address the current inaccuracies, which result from random noise. Finally, future experiments should include tracking of ArUco markers moving in three dimensions (X, Y, Z) to simulate patient behavior.

The separate studies of AR and optical tracking demonstrate the feasibility of AR and optical tracking for surgical navigation with ArUco markers. These low-cost technologies minimize the radiation exposure of traditional spinal procedure approaches while enhancing surgical visualization. Future work will integrate our AR system with our own optical tracking algorithms to result in a system that will further enhance surgical efficiency for spinal pain management procedures.

5. Acknowledgements

The authors would like to thank Dr. Farshad Ahadian from the Center for Pain Medicine at the University of California, San Diego, Ananya Rajan from the University of California, San Diego, Center for Memory and Recording Research, and Darin Tsui from the School of Electrical and Computer Engineering, Georgia Institute of Technology, for their interesting discussions and suggestions during this research.

References

[1] D. L. Miller, "Overview of Contemporary Interventional Fluoroscopy Procedures," Health Physics, vol. 95, no. 5, pp. 638-644, Nov. 2008.

[2] R. Silbergleit, B. A. Mehta, W. P. Sanders, and S. J. Talati, "Imaging-guided injection techniques with fluoroscopy and CT for spinal pain management," Radiographics: A Review Publication of the Radiological Society of North America, Inc, vol. 21, no. 4, pp. 927-939; discussion 940-942, Jul. 2001.

[3] L. E. Leggett, L. J. J. Soril, D. L. Lorenzetti, T. Noseworthy, R. Steadman, S. Tiwana, and F. Clement, "Radiofrequency Ablation for Chronic Low Back Pain: A Systematic Review of Randomized Controlled Trials," Pain Research and Management, vol. 19, no. 5, pp. e146-e153, 2014.

[4] B. Benny and P. Azari, "The efficacy of lumbosacral transforaminal epidural steroid injections: A comprehensive literature review," Journal of Back and Musculoskeletal Rehabilitation, vol. 24, no. 2, pp. 67-76, May 2011.

[5] L. Wagner, "Radiation injury is a potentially serious complication to fluoroscopically-guided complex interventions," Biomedical Imaging and Intervention Journal, vol. 3, no. 2, Apr. 2007.

[6] G. M. Malham and T. Wells-Quinn, "What should my hospital buy next?-Guidelines for the acquisition and application of imaging, navigation, and robotics for spine surgery," J. Spine Surg., vol. 5, no. 1, pp. 155-165, Mar. 2019, doi: 10.21037/jss.2019.02.04.

[7] A. Taghian, M. Abo-Zahhad, M. S. Sayed, and A. H. Abd, "Virtual and augmented reality in biomedical engineering," Biomedical Engineering Online, vol. 22, no. 1, Jul. 2023.

[8] C. A. Molina, N. Theodore, A. K. Ahmed, E. M. Westbroek, Y. Mirovsky, R. Harel, E. Orru, M. Khan, T. Witham, and D. M. Sciubba, "Augmented reality-assisted pedicle screw insertion: a cadaveric proof-of-concept study," Journal of Neurosurgery: Spine, vol. 31, no. 1, pp. 139-146, Jul. 2019.

[9] A. Conger, T. Burnham, F. Salazar, Q. Tate, M. Golish, R. Petersen, S. Cunningham, M. Teramoto, R. Kendall, and Z. L. McCormick, "The Effectiveness of Radiofrequency Ablation of Medial Branch Nerves for Chronic Lumbar Facet Joint Syndrome in Patients Selected by Guideline-Concordant Dual Comparative Medial Branch Blocks," Pain Medicine, vol. 21, no. 5, pp. 902-909, Oct. 2019.

[10] M. T. Sqalli, B. Aslonov, M. Gafurov, N. Mukhammadiev, and Y. S. Houssaini, "Eye tracking technology in medical practice: a perspective on its diverse applications," Front. Med. Technol., vol. 5, Nov. 2023, doi: 10.3389/fmedt.2023.1253001.

[11] K. Patel, P. Chopra, S. Martinez, and S. Upadhyayula, "Epidural Steroid Injections," StatPearls [Internet], Treasure Island (FL): StatPearls Publishing, Jan. 2024. [Online].

[12] M. A. de Souza, D. C. A. Cordeiro, J. de Oliveira, M. F. A. de Oliveira, and B. L. Bonafini, "3D multi-modality medical imaging: Combining anatomical and infrared thermal images for 3D reconstruction," Sensors, vol. 23, no. 3, p. 1610, Feb. 2023, doi: 10.3390/s23031610.

[13] Z. Phillips, R. J. Canoy, S.-h. Paik, S. H. Lee, and B.-M. Kim, "Functional near-infrared spectroscopy as a personalized digital healthcare tool for brain monitoring," J. Clin. Neurol., vol. 19, no. 2, pp. 115-124, Mar. 2023, doi: 10.3988/jcn.2022.0406.

[14] J. Simon, "Augmented reality application development using Unity and Vuforia," Interdisciplinary Description of Complex Systems: INDECS, vol. 21, no. 1, 2023, doi: 10.7906/indecs.21.1.6.

[15] J. Shah, P. Pandey, T. Kamat, and P. Tawde, "The integration of OpenCV and Unity for the development of interactive educational game," International Journal of Scientific Research in Computer Science, Engineering and Information Technology, vol. 9, no. 4, pp. 425-431, Jul.-Aug. 2023, doi: 10.32628/IJSRCSEIT.

[16] D. Tsui, K. Ramos, C. Melentyev, A. Rajan, M. Tam, M. Jo, F. Ahadian, and F. E. Talke, "A low-cost, open-source-based optical surgical navigation system using stereoscopic vision," Microsystem Technologies, vol. 2024, May 28, 2024. [Online]. Available: https://link.springer.com/article/10.1007/s00542-024-07984-7

[17] OpenCV Developers, "Detection of ArUco markers," OpenCV Documentation, OpenCV. [Online].

[18] A. H. Khan, S. Li, D. Chen, and L. Liao, "Tracking control of redundant mobile manipulator: An RNN based metaheuristic approach," Neurocomputing, vol. 400, pp. 272-284, Aug. 2020.

[19] P. Lau, S. Mercer, J. Govind, and N. Bogduk, "The Surgical Anatomy of Lumbar Medial Branch Neurotomy (Facet Denervation)," Pain Medicine, vol. 5, no. 3, pp. 289-298, Sep. 2004.

[20] M. Ribo, A. Pinz, and A. L. Fuhrmann, "A new optical tracking system for virtual and augmented reality applications," IMTC 2001. Proceedings of the 18th IEEE Instrumentation and Measurement Technology Conference. Rediscovering Measurement in the Age of Informatics (Cat. No.01CH 37188), Budapest, Hungary, 2001, pp. 1932-1936 vol. 3, doi: 10.1109/IMTC.2001.929537.

[21] C.-Y. Chong, "An Overview of Machine Learning Methods for Multiple Target Tracking," 2021 IEEE 24th International Conference on Information Fusion (FUSION), Sun City, South Africa, 2021, pp. 1-9, doi: 10.23919/FUSION49465.2021.9627045.